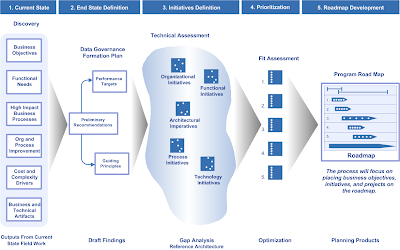

1) Develop a clear and unambiguous understanding of the current state

- Business objectives

- Functional needs

- High impact business processes

- Current operating model

- Cost and complexity drivers

- Business and technical assets (Artifacts)

2) Define desired end state

- Performance targets (Cash flow, Profitability, Growth, Customer intimacy)

- Operating model improvements

- Guiding principles

3) Conduct Gap Analysis

- Gap closure strategies

- Organizational

- Functional

- Architectural (technology)

- Process

- Reward or economic incentives

The following diagram illustrates a sample index of the gap analysis findings, grouped by the four architecture domains (Business, Information, Application, and Technology).

4) Prioritize

- Actionable items

- Relative business value

- Technical complexity
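One way to make the prioritization step concrete is to score each actionable item by its relative business value against its technical complexity. The sketch below is illustrative only: the item names, the 1 to 5 scales, and the value-to-complexity ratio are all assumptions, not part of the method described here, and a real exercise would likely use weights agreed with stakeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ActionItem:
    name: str
    business_value: int        # assumed scale: 1 (low) to 5 (high)
    technical_complexity: int  # assumed scale: 1 (simple) to 5 (hard)


def priority_score(item: ActionItem) -> float:
    """Hypothetical score: higher value and lower complexity rank first."""
    return item.business_value / item.technical_complexity


def prioritize(items: list[ActionItem]) -> list[ActionItem]:
    """Sort by descending score, breaking ties alphabetically by name."""
    return sorted(items, key=lambda i: (-priority_score(i), i.name))


# Hypothetical actionable items, for illustration only.
items = [
    ActionItem("Consolidate customer records", 5, 4),
    ActionItem("Define data stewardship roles", 4, 2),
    ActionItem("Retire legacy product catalog", 2, 5),
]

ranked = [item.name for item in prioritize(items)]
for position, name in enumerate(ranked, start=1):
    print(position, name)
```

A simple ratio favors quick wins; if the organization prefers to tackle high-value items regardless of difficulty, the scoring function is the only piece that would need to change.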

5) Discover the Optimum Sequence

- Dependencies of actionable items

- Capacity of the organization to absorb change
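The two sequencing inputs above, dependencies between actionable items and the organization's capacity for change, can be sketched as a dependency-ordered plan split into phases. This is a minimal illustration under stated assumptions: the item names are hypothetical, "capacity" is simplified to a maximum number of items per phase, and the ordering uses Python's standard `graphlib.TopologicalSorter` (Python 3.9+) rather than any technique prescribed by the method.

```python
from graphlib import TopologicalSorter


def plan_phases(dependencies: dict[str, set[str]], capacity: int) -> list[list[str]]:
    """Group items into phases so that prerequisites always land in an
    earlier phase and no phase holds more than `capacity` items."""
    if capacity < 1:
        raise ValueError("capacity must be at least 1")
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()  # raises graphlib.CycleError on circular dependencies
    phases: list[list[str]] = []
    ready: list[str] = []
    while sorter.is_active():
        ready.extend(sorter.get_ready())  # items whose prerequisites are done
        ready.sort()                      # deterministic order within a phase
        phase, ready = ready[:capacity], ready[capacity:]
        phases.append(phase)
        sorter.done(*phase)               # unlocks items that depend on this phase
    return phases


# Hypothetical items: each key maps to the set of items it depends on.
dependencies = {
    "Define data stewardship roles": set(),
    "Profile source data": set(),
    "Consolidate customer records": {
        "Define data stewardship roles",
        "Profile source data",
    },
    "Retire legacy product catalog": {"Consolidate customer records"},
}

for number, phase in enumerate(plan_phases(dependencies, capacity=2), start=1):
    print(f"Phase {number}: {', '.join(phase)}")
```

Lowering `capacity` stretches the same work over more phases without violating any dependency, which is one way to reflect a lower tolerance for simultaneous change.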

6) Develop the Road Map

A Sample MDM Roadmap